Large Language Models (LLM)

Essentially: "VERY fancy auto-complete"

Caveman with Rock : Orchestral Symphony :: Autocomplete : LLM

Prerequisite: Have the computing infrastructure and research budget equivalent to a medium-sized country.

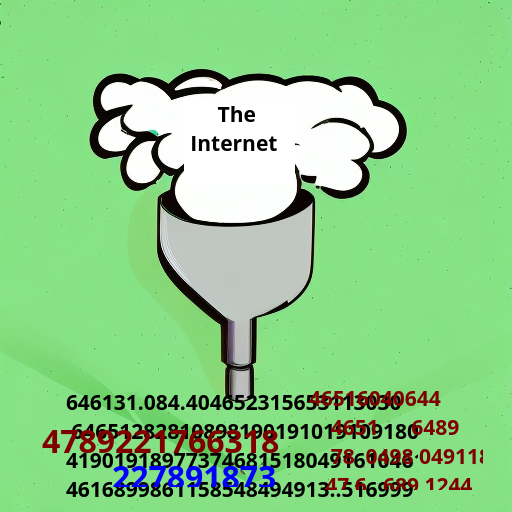

Step 1: Absorb all human text and turn it into numbers

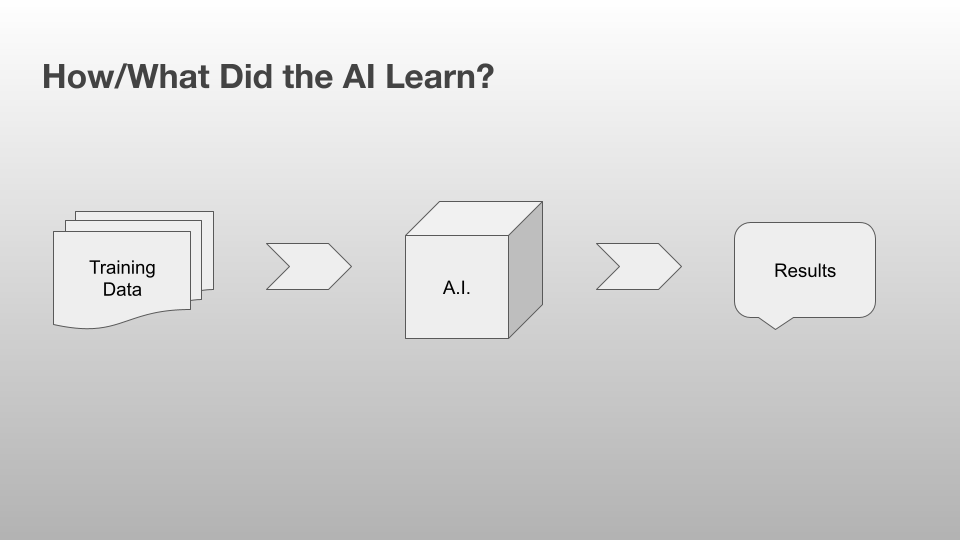

Step 2: Run complex mathematical formula on the numbers to learn how human text "works"

Step 3: "Train" or "teach" computer programs how to understand the relationships between the numbers (words)

Step 4: Have low-paid humans oversee the computer "training" and "grade" the computers' progress

Step 5: Turn your question into numbers, analyze those numbers with respect to all the other numbers, predict the "answer" numbers.

Step 6: Take the final "answer" numbers and turn them back into language.

Wait a minute: ALL human text?

LLM Training Corpora:

- CommonCrawl (public Internet scrape)

- Wikipedia

- Reddit (GPT-2)

- Pubmed

- Github

- Gutenberg

- ArXiv

- "Books1" or "Books2" or "Books3"? (GPT-3 and ThePile)

-- https://stanford-cs324.github.io/winter2022/lectures/data/

Wait, "low-paid humans?"

https://time.com/6247678/openai-chatgpt-kenya-workers/

https://gizmodo.com/chatgpt-openai-ai-contractors-15-dollars-per-hour-1850415474

More Technical Info:

https://news.ycombinator.com/item?id=35977891

https://www.microsoft.com/en-us/research/blog/ai-explainer-foundation-models-and-the-next-era-of-ai/

https://www.reuters.com/technology/what-is-generative-ai-technology-behind-openais-chatgpt-2023-03-17/

https://www.mckinsey.com/featured-insights/mckinsey-explainers/what-is-generative-ai

https://www.mckinsey.com/featured-insights/mckinsey-explainers/what-is-ai

https://en.wikipedia.org/wiki/Transformer_(machine_learning_model)